Abstract

Deep learning has grown rapidly in recent years and achieved state-of-the-art performance in a wide range of applications. However, training models typically requires expensive and time-consuming collection of large quantities of labeled data. This is particularly true in medical image analysis (MIA), where data are limited and labels are expensive to acquire. Label-efficient deep learning methods have therefore been developed to make comprehensive use of the labeled data as well as the abundance of unlabeled and weakly labeled data. In this survey, we investigate over 300 recent papers to provide a comprehensive overview of recent progress on label-efficient learning strategies in MIA. We first present the background of label-efficient learning and categorize the approaches into different schemes. Next, we examine the current state-of-the-art methods in detail for each scheme. Specifically, we provide an in-depth investigation covering not only the canonical semi-supervised, self-supervised, and multi-instance learning schemes, but also the more recently emerged active and annotation-efficient learning strategies. Finally, the survey elucidates the commonalities and unique features of the surveyed methods, presents a detailed analysis of the current challenges in the field, and suggests potential avenues for future research.

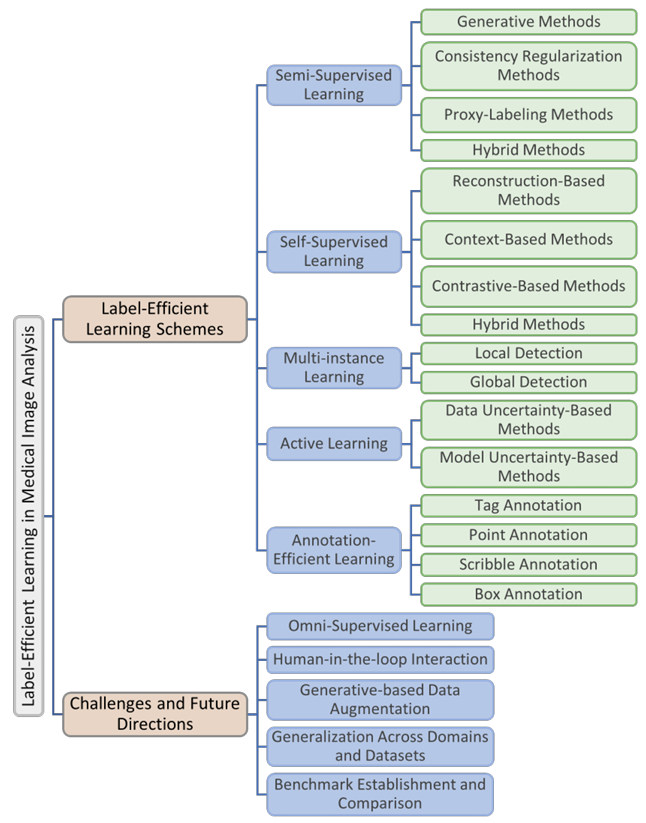

Outline of the Proposed Survey

Graphical Abstract of Each Scheme


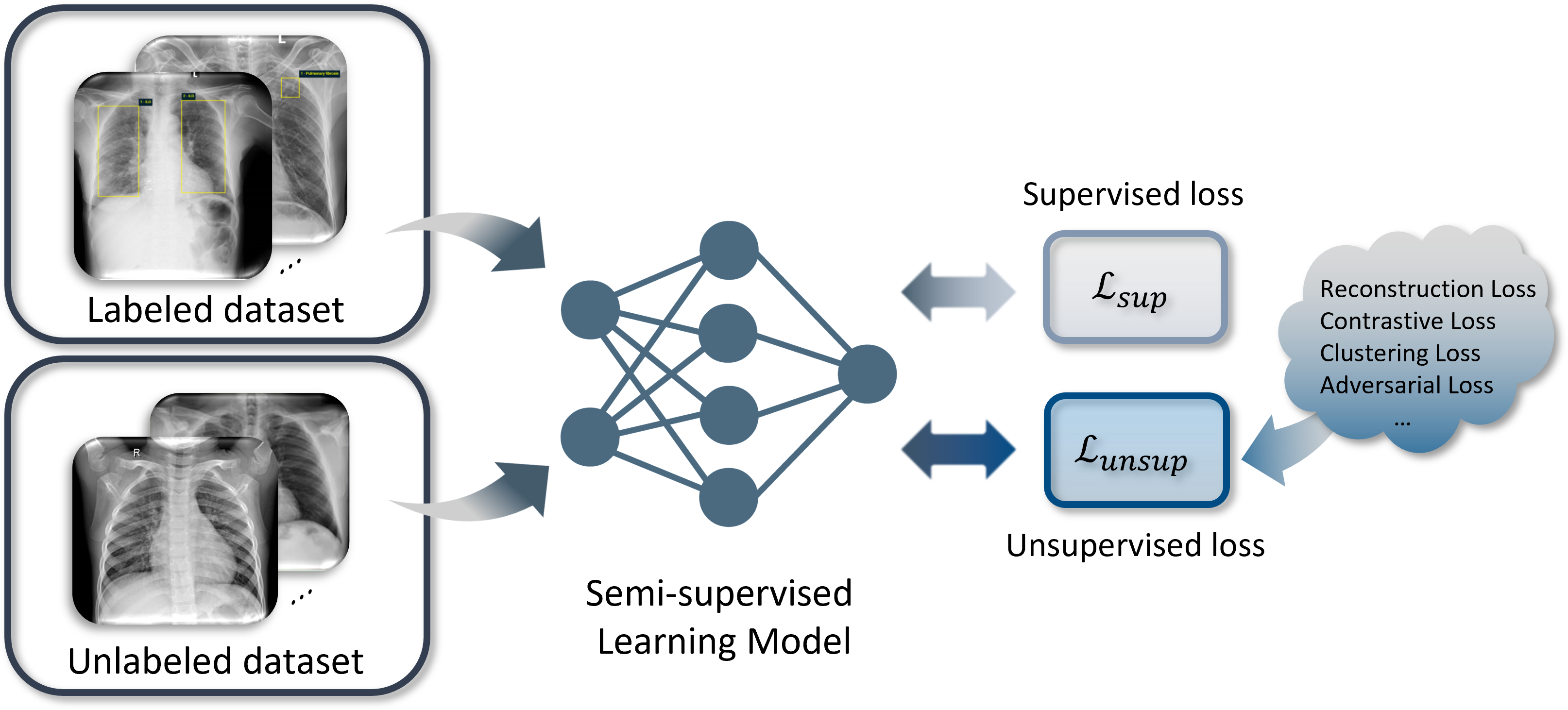

Semi-Supervised Learning (Semi-SL)


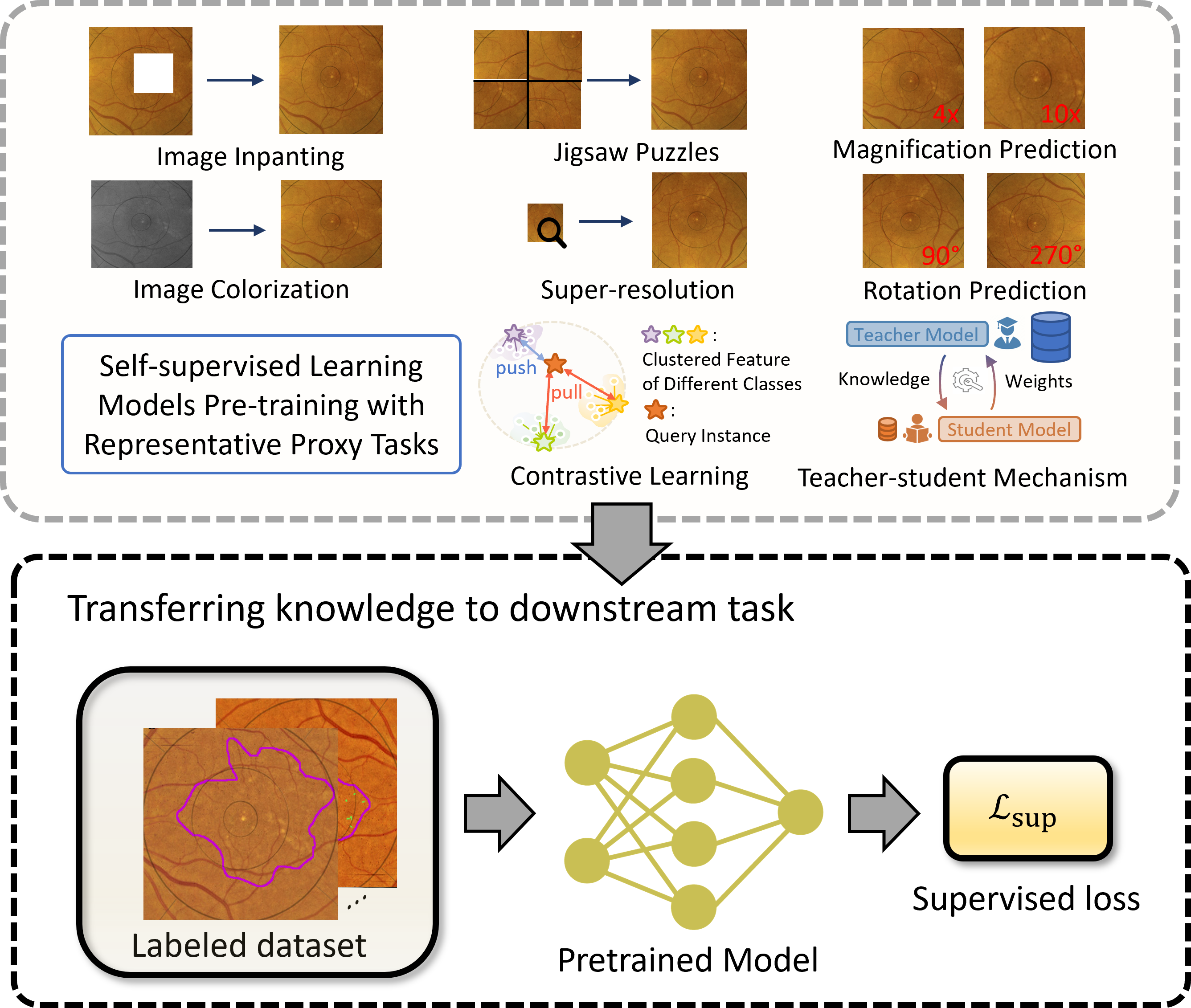

Self-Supervised Learning (Self-SL)


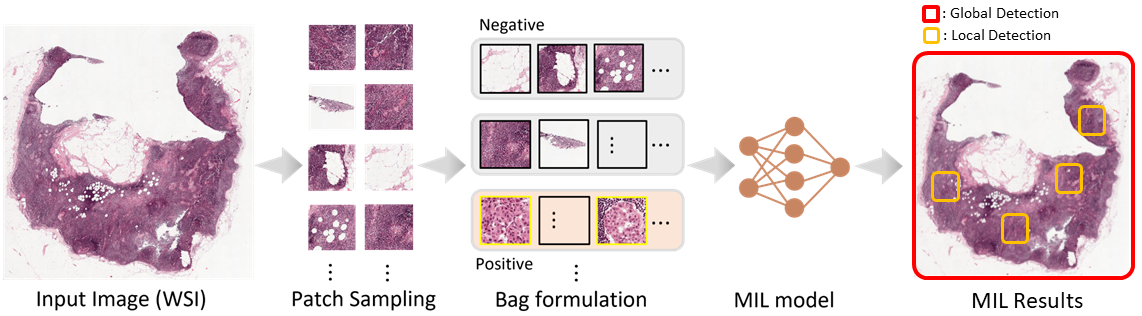

Multi-Instance Learning (MIL)


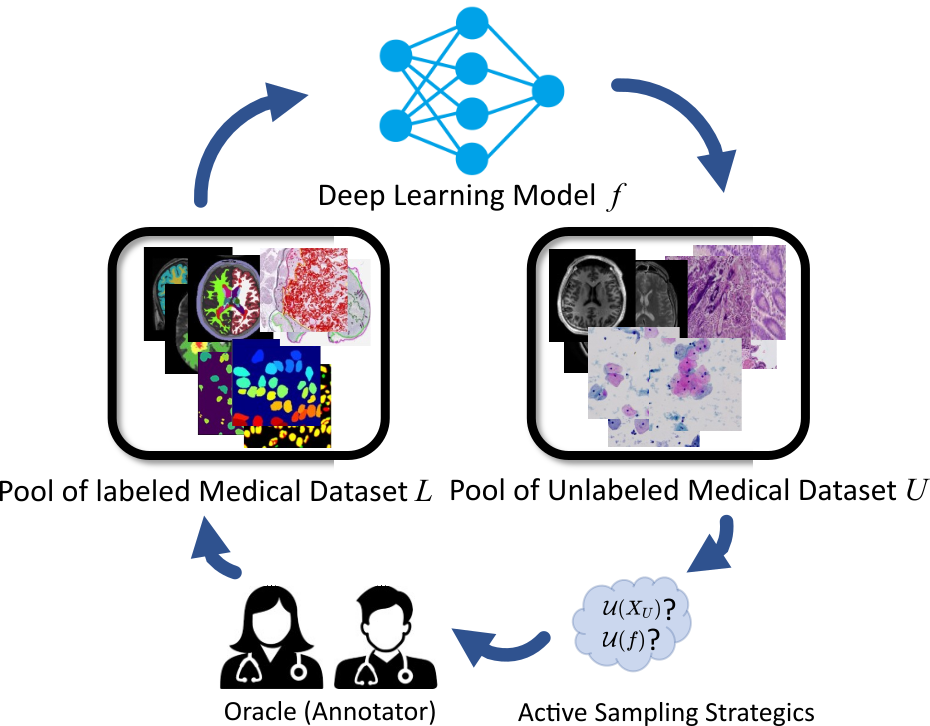

Active Learning (AL)


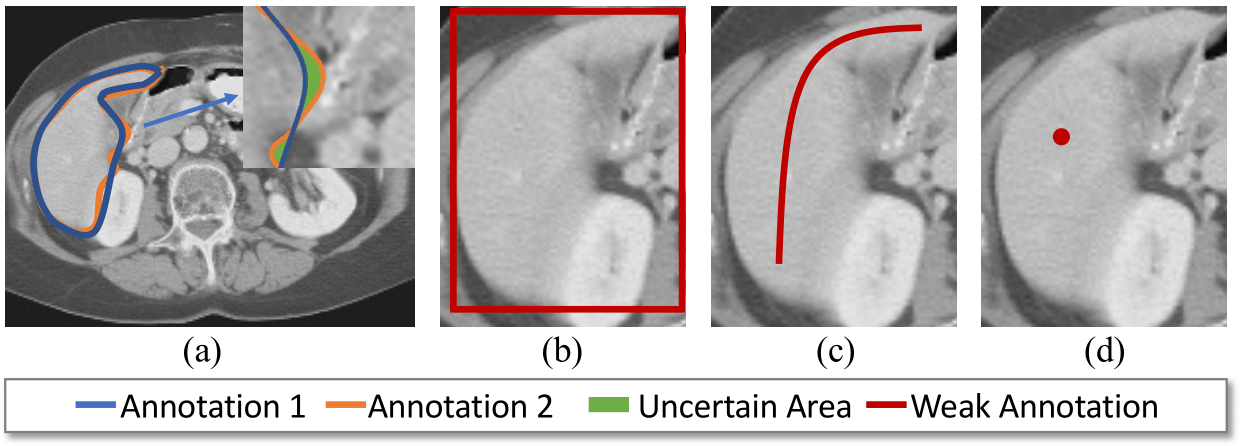

Annotation-Efficient Learning (AEL)

For the technical details, please refer to the original paper.

Downloads

arXiv Preprint: arXiv:2303.12484

Reference

@article{jin2023labelefficient,
  title={Label-Efficient Deep Learning in Medical Image Analysis: Challenges and Future Directions},
  author={Jin, Cheng and Guo, Zhengrui and Lin, Yi and Luo, Luyang and Chen, Hao},
  journal={arXiv preprint arXiv:2303.12484},
  year={2023}
}